Background and history

In 2015, people working at 18F noticed their colleagues using the word “guys” to describe groups of people. An effort began to consider ways to nudge colleagues into using more inclusive language. The effort’s goal was not to shame or penalize anyone, but to encourage everyone to be more thoughtful and intentional with their words. The result was a simple customization of our internal Slack chat’s Slackbot auto-response: whenever it saw the word “guys,” it would respond and ask if the person meant something else, such as “friends” or “y’all.”

Telling the stories of our work through avenues such as our blog is one important way we uphold core values such as working in the open and scaling our impact beyond the partners we are fortunate enough to work with directly. This is one example, among many, of an internal practice that has helped us grow as an organization.

Over the years, the bot’s automatic nudge became known informally as “guys bot,” and it stuck with us, dutifully doing its job until we made some changes to it in 2020.

Shortcomings and iteration

Over time, we observed that our “guys bot” was far from perfect. The bot responded publicly, and some people felt that a public call-out was shaming. It was absolutist, responding to any “guys” reference, which meant it flagged some language unintentionally, such as conversations about businesses or shows with the word “guy” in their names, or even discussions about the bot itself. Its suggestions also relied on cultural references, which confused people who were not familiar with those particular movies or songs.

At the time of the bot’s development, Slack’s built-in Slackbot offered few customization options, so we could not address the accidental/erroneous responses. TTS has a separate custom Slack bot called Charlie, which provides a variety of interactive functionality, such as looking up government codes used to identify offices, reminding people to fill out their timesheets, and scheduling random teammates for virtual coffees to build team cohesion and community. With Charlie’s flexibility, we realized we could begin addressing the erroneous responses from “guys bot” by migrating it from a built-in Slack auto-response to a Charlie behavior.

In 2019, the TTS Diversity Guild began gathering feedback and building an outline of what a brand new, less limited “guys bot” might look like. The “guys bot” was ported entirely to Charlie in 2020, and we collaboratively refined its behavior. Initially, Charlie was provided with a list of phrases to ignore, so lunch plans or afternoon tea breaks wouldn’t prompt nudges. We also taught Charlie to ignore the word “guys” in quotes, so we could talk about the bot without triggering the bot. Pretty soon, we realized that we could introduce a lot more language nudges than just “guys,” and we renamed “guys bot” to “Inclusion Bot.”
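To make the matching rules above concrete, here is a minimal sketch in Python of how a message might be checked. It is an illustration under stated assumptions, not Charlie’s actual code: the names `SUGGESTIONS`, `IGNORED_PHRASES`, and `find_nudges` are hypothetical, and the ignored phrases are made-up examples rather than the bot’s real list.

```python
import re

# Hypothetical trigger list: each term maps to suggested alternatives.
# The alternatives for "guys" are the ones mentioned in this post.
SUGGESTIONS = {"guys": ["friends", "y'all"]}

# Illustrative phrases to skip (assumed; not the bot's real ignore list).
IGNORED_PHRASES = ["five guys", "guys and dolls"]

# Text inside straight or curly double quotes is treated as talking
# *about* a word rather than using it, so it is removed before matching.
QUOTED = re.compile(r'"[^"]*"|“[^”]*”')


def find_nudges(message: str) -> dict[str, list[str]]:
    """Return the triggered terms and their suggested alternatives."""
    text = message.lower()
    text = QUOTED.sub(" ", text)           # drop quoted spans
    for phrase in IGNORED_PHRASES:         # drop known harmless phrases
        text = text.replace(phrase, " ")
    return {
        term: alternatives
        for term, alternatives in SUGGESTIONS.items()
        if re.search(rf"\b{re.escape(term)}\b", text)  # whole words only
    }
```

In this sketch, a message like `Hey guys, standup in five` would produce a nudge, while `Lunch at Five Guys?` or a message that mentions “guys” in double quotes would not. Removing the ignored phrases and quoted spans before matching keeps the trigger check itself simple, so adding a new term only means adding an entry to the list.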

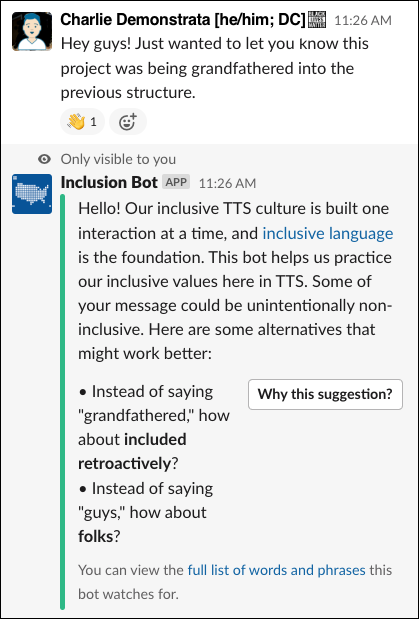

With Inclusion Bot now in service, we quickly began to get feedback. People still sometimes felt called out, and they were often unsure why the bot was suggesting different language. To help with these concerns, we updated the bot so that only the person who triggers it will see a response. The Inclusion Bot also adds an emoji to the original statement, signifying that it has responded:

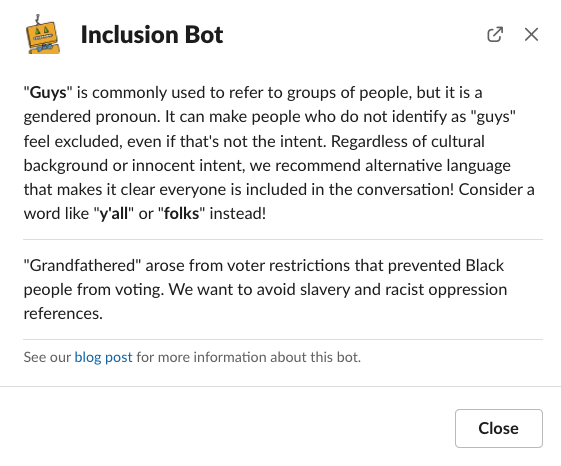

Additionally, we added a button that users can click to get more information about why the highlighted words or phrases can be problematic.

Why it matters to TTS

The TTS Handbook lists inclusion as our top value, and the original bot was inspired by our desire to increase everyone’s sense of belonging by not using a gendered term to refer to broad groups of people. The Inclusion Bot furthers that goal, and even reflects this critical organizational value right in its name. As our Code of Conduct states:

“We strive to create a welcoming and inclusive culture that empowers people to provide outstanding public service. That kind of atmosphere requires an open exchange of ideas balanced by thoughtful guidelines.”

Inclusion Bot is a tool that helps us find and maintain that balance by gently letting us know when we use language that can be hurtful or non-inclusive, without being intrusive or stopping a conversation. We want to create an environment where everyone feels safe to contribute, and that means being mindful of how we say things.

Learning about inclusive and caring language is important, but it can also be difficult. The bot makes this a little easier by educating us at the moment we use potentially hurtful language, and teaching us about words and phrases that many of us didn’t know have troubling roots, such as “grandfathering.”

Where we are now

With a few years of iteration on Inclusion Bot under our belt, we now have a public, open source list of the words and phrases the bot listens for. An active community contributes to the list, refining not just what language we want to be thoughtful about but also how we want to educate ourselves about that language. Anyone (including you!) can suggest changes directly to the word list through the GitHub repository. Once changes have been approved and merged, the additions will appear in the bot immediately. The goal is to make maintaining and modifying the bot as accessible and inclusive as possible, in the spirit of the bot itself.

We’ve also spent more time thinking about automated responses and reconsidering how people engage with them. We learned that newcomers to TTS often perceived bot messages as potentially punitive. To help address this, we now introduce new staff to Inclusion Bot as part of their initial onboarding. We want everyone to understand and value the bot as one tool to help us build a thoughtful, inclusive organization.

Inclusion Bot is not a language policing tool, nor is it a disciplinary tool. The bot cannot understand context – it is a blunt instrument. As a result, we offer it as a tool to help TTS staff identify language that is accidentally non-inclusive or hurtful. It is meant to help us learn. Intentionally harmful or exclusionary language is a matter for management to address, not a bot.

Future

TTS is an iterative organization, and the Inclusion Bot will continue to evolve as it reflects changes in language. We’ll continue to research and observe how people engage with and understand the bot. And we always welcome feedback, even from people who aren’t in TTS! Do you have ideas on how we could make the bot even better? Feel free to share them with us in our GitHub discussions!